It’s 3 am PST here in California. The weather’s quite breezy, soothing, and romantic, and meanwhile I’m having a hot coffee in front of my laptop, completing assignments. Here’s my second article! Do comment if any improvements are needed or if I should change the article title.

I just created a lucid prompt above. Bazinga!

Imagine you are at prom night and you get a chance to confess publicly. The phrases, or prompts, you use matter a lot: they decide how effectively, persuasively, and lucidly you speak. This sounds quite dramatic, like something from the 2000s, but this is the era in which prompts have again begun to play a significant role in our lives. Recent breakthroughs in technology, and specifically in artificial intelligence, have produced efficient and robust AI models built on intricately designed neural network architectures. However, this reliability isn’t just because of the model but also because of the quality of the data on which it was trained.

Why is Prompt Engineering So Important for AI Models?

So, to understand the importance of prompt engineering, let’s first consider how AI models function. AI models, such as language models or bots, learn from the data they are fed. The quality of the data plays a vital role in determining the accuracy of the AI model.

However, the data provided to AI models is often incomplete or biased. To address this issue, prompt engineering comes into play. Effective prompt engineering can help AI models to learn from high-quality data and reduce bias in the learning process. For instance, when it comes to large language models (LLMs), the prompts they are given can significantly affect the model’s output. If the prompts are too general or not tailored to the specific task at hand, the model may struggle to produce accurate responses. Conversely, if the prompts are too complex or too narrow, the model may struggle to follow them.

Prompt: Cool, right! Now let’s follow our tradition and have a definition of this term as well.

Prompt engineering is a comprehensive process of deliberately and systematically designing, refining, and crafting lucid, concise, and compelling prompts, along with the underlying data structures, to steer AI systems towards specific, desired outputs. Phew! Too much to handle. Let me get it straight:

Prompt engineering is just a fancy way of describing how you write the text you give to a Large Language Model (LLM), like GPT-3 (with its massive 175 billion parameters) or GPT-3.5, to get a specific result.

That being said, it is much more than just “write a blog post on…”. It is a sophisticated and nuanced discipline that requires a thorough understanding of the underlying principles and approaches that drive effective prompt design. For example, if you ask ChatGPT to give you five blog title ideas about “Travelling to Hawaii,” your text is the prompt, and the response you get from ChatGPT is the result. It’s that simple!

The Future of Human-AI Interaction Lies in Prompt Engineering

But what if the results aren’t what you expected? That’s where prompt engineering comes in. Prompt engineering is a field that emerged out of necessity as the creators of AI models and systems struggled to communicate effectively with their creations. Initially, even the creators of these AI models and systems did not know how to get them to produce the desired outputs reliably.

Prompt: Microsoft recently hosted JARVIS aka HuggingGPT on HuggingFace. Try it now!!

Now that we have the nuances of what prompt engineering is and why it matters now and in the coming future, let’s dive into what prompts and prompting are in AI.

Let’s get Prompting!!

Prompting involves creating the right prompt or command for the AI system to respond to. The prompt should be designed so that the AI system can understand it and generate a relevant output. In general, these are the main types of prompting: a) Standard Prompting, b) Role Prompting, c) Few Shot Prompting, d) Chain of Thought.

source: https://learnprompting.org/docs/basics/roles

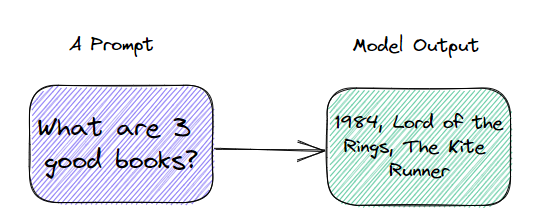

A. Standard Prompting: This simply provides a prompt without any reference or example. For example: “Which city is most densely populated?” and damn! You will get your answer :) Also, few-shot standard prompts are just standard prompts that have exemplars in them. Exemplars are examples of the task that the prompt is trying to solve, which are included in the prompt itself.
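Here is a tiny sketch of what a standard prompt looks like as plain text. The helper name `standard_prompt` and the Q/A layout are my own assumptions for illustration, not part of any library; the point is only that nothing but the question goes in.

```python
def standard_prompt(question: str) -> str:
    """Format a bare question in Q/A form, with no exemplars attached."""
    # The trailing "A:" leaves the answer slot open for the model to fill.
    return f"Q: {question.strip()}\nA:"


prompt = standard_prompt("Which city is most densely populated?")
print(prompt)
```

You would paste the printed text into the model of your choice as-is.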

source: https://learnprompting.org/docs/basics/roles

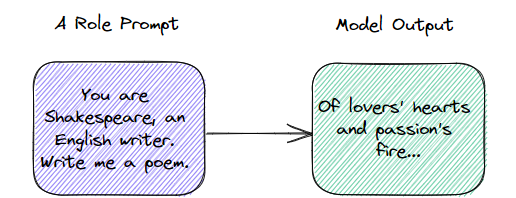

B. Role Prompting: Now, observe the above image and how the prompt starts. This prompting technique assigns a role to the AI. For example, “You are an expert in writing blog articles that go viral. I want you to write a blog post that will make people want to click and read the article.” Notice how I start the prompt by assigning a role to ChatGPT (You are an expert in writing blog articles). This is called Role Prompting.
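To make the pattern concrete, here is a minimal sketch that puts the role first and the request second. Both helper names (`role_prompt`, `as_chat_messages`) are hypothetical, and the role-tagged message layout in the second helper is an assumption about chat-style interfaces, so check your provider's docs before relying on it.

```python
def role_prompt(role: str, task: str) -> str:
    """Assign the role first, then state the actual request."""
    return f"You are {role}. {task}"


def as_chat_messages(role: str, task: str) -> list[dict[str, str]]:
    """Same idea for chat-style interfaces that accept role-tagged messages.

    The message layout is an assumption, not a specific vendor's API.
    """
    return [
        {"role": "system", "content": f"You are {role}."},
        {"role": "user", "content": task},
    ]


ROLE = "an expert in writing blog articles"
TASK = (
    "I want you to write a blog post that will make people "
    "want to click and read the article."
)

prompt = role_prompt(ROLE, TASK)
messages = as_chat_messages(ROLE, TASK)
print(prompt)
```

Keeping the role separate from the task makes it easy to try the same request with a different persona.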

source: https://learnprompting.org/docs/basics/few_shot

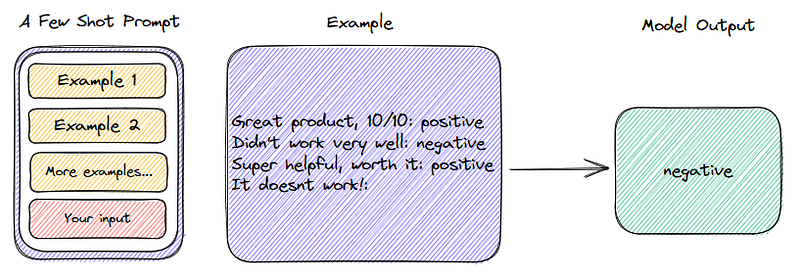

C. Few Shot Prompting: An exciting technique that shows the LLM a few shots, or examples, of the task inside the prompt itself, so that it can pick up the pattern and the output format you want.
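A few-shot prompt can be sketched as a handful of solved examples followed by one unsolved one. The reviews, labels, and the `few_shot_prompt` helper below are all made up for illustration.

```python
def few_shot_prompt(exemplars, new_input):
    """Join solved examples, then add the new input with its label left blank."""
    blocks = [f"Review: {text}\nSentiment: {label}" for text, label in exemplars]
    # The final block has no label, so the model is nudged to supply one.
    blocks.append(f"Review: {new_input}\nSentiment:")
    return "\n\n".join(blocks)


# Hypothetical exemplars: each pair is (input, desired output).
EXEMPLARS = [
    ("Great product, works perfectly.", "positive"),
    ("It broke after one day.", "negative"),
    ("Exactly what I was looking for.", "positive"),
]

prompt = few_shot_prompt(EXEMPLARS, "Totally worth the money.")
print(prompt)
```

Because every exemplar ends with a one-word label, the model tends to answer in the same one-word format.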

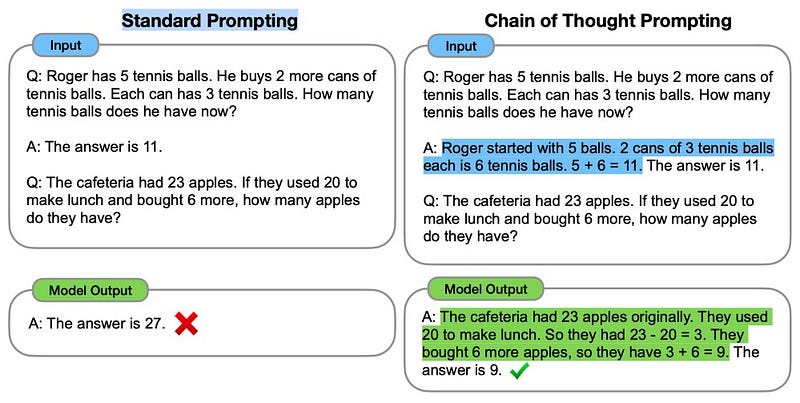

D. Chain of Thought: A prompting strategy that encourages the LLM to explain its reasoning. The above image shows a few-shot standard prompt (left) compared to a chain of thought (CoT) prompt (right). The main idea of CoT is that by showing the LLM some few-shot exemplars in which the reasoning process is spelled out, the LLM will also show its reasoning process when answering the prompt. This explanation of reasoning often leads to more accurate results.
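The difference between the two prompts is small enough to show in code: the only change is whether the exemplar's answer includes its reasoning. This is a rough sketch; the `Exemplar` class, the `build_prompt` helper, and the word problems are my own hypothetical examples.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    question: str
    reasoning: str
    answer: str


def build_prompt(exemplars, question, chain_of_thought):
    """Build a few-shot prompt; include the reasoning only for CoT."""
    blocks = []
    for ex in exemplars:
        if chain_of_thought:
            shown = f"{ex.reasoning} The answer is {ex.answer}."
        else:
            shown = f"The answer is {ex.answer}."
        blocks.append(f"Q: {ex.question}\nA: {shown}")
    # The new question is left unanswered for the model to complete.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


EXEMPLARS = [
    Exemplar(
        question="A shop has 3 boxes with 4 pens each and sells 5 pens. "
                 "How many pens are left?",
        reasoning="The shop starts with 3 x 4 = 12 pens. After selling 5, "
                  "12 - 5 = 7 pens remain.",
        answer="7",
    ),
]
NEW_QUESTION = (
    "A library has 5 shelves with 6 books each and lends out 8 books. "
    "How many books are left?"
)

standard = build_prompt(EXEMPLARS, NEW_QUESTION, chain_of_thought=False)
cot = build_prompt(EXEMPLARS, NEW_QUESTION, chain_of_thought=True)
print(cot)
```

Printing `standard` next to `cot` shows the same question twice, with the worked steps present only in the second.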

Now that we understand the importance of prompt engineering and types of prompts, let’s explore some of the best practices for effective prompt engineering:

Understand the data: Before creating prompts, it is essential to understand the data you are working with. Analyze the data to identify any biases or gaps that need to be addressed.

Keep it simple: Overly complex prompts can confuse AI models and negatively impact their performance. Keep your prompts simple and straightforward.

Be specific: Tailor your prompts to the specific task at hand. For instance, when you want an LLM to summarize text, use prompts that focus on summarization.

Use diverse prompts: Provide a variety of prompts to your AI model to ensure it learns from a diverse range of data.

Monitor and adjust: Regularly monitor your AI model’s performance and adjust prompts as necessary to improve accuracy.
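The "be specific" and "monitor and adjust" practices above can be sketched as a small loop that scores prompt variants against a few test cases. Everything here is an assumption for illustration: `fake_model` is a stand-in for a real LLM call, and the cases and templates are invented.

```python
def accuracy(template, cases, model):
    """Fraction of cases where the expected label appears in the model output."""
    hits = 0
    for text, expected in cases:
        output = model(template.format(text=text))
        if expected.lower() in output.lower():
            hits += 1
    return hits / len(cases)


def fake_model(prompt):
    """Stand-in for a real LLM (assumption): it only answers usefully
    when the prompt asks for a one-word reply."""
    if "one word" not in prompt:
        return "I am not sure what you want."
    return "positive" if "love" in prompt else "negative"


CASES = [
    ("I love this phone", "positive"),
    ("The battery died in a day", "negative"),
]
TEMPLATES = {
    "vague": "{text}",
    "specific": (
        "Classify the sentiment of this review as positive or negative. "
        "Reply with one word.\nReview: {text}\nSentiment:"
    ),
}

scores = {name: accuracy(t, CASES, fake_model) for name, t in TEMPLATES.items()}
best = max(scores, key=scores.get)
print(scores, "-> keep:", best)
```

With a real model in place of `fake_model`, you would rerun this whenever you change a prompt and keep the variant that scores best.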

End Note!

In conclusion, prompt engineering is a crucial aspect of ensuring the accuracy and efficiency of AI models. By understanding the best practices for prompt engineering and tailoring prompts to the specific task at hand, you can help your AI models learn from high-quality data and reduce bias in the learning process. So, start incorporating these best practices into your prompt engineering process and take your AI models to the next level!

References!

1. https://learnprompting.org/

2. https://www.promptengineering.org/what-is-prompt-engineering/